Generative AI 3D model technology is rapidly changing how creators take ideas from concept to finished asset. Work that once required days of manual modeling, texture painting, and mesh cleanup can now start from a simple text prompt or reference image and return a first draft in a fraction of the time. In this article, we explore how generative AI can support everything from early concept art to game-ready assets, keeping developers and 3D teams in control while significantly reducing production time.

What is a generative AI 3D model and why does it matter today?

A generative AI 3D model is a three-dimensional object created by artificial intelligence rather than modeled by hand. Instead of building every polygon manually, you describe the object in plain text or upload a reference image or photo as a starting point. A machine learning model, typically trained on large collections of 3D shapes, textures, and their spatial relationships, then generates the geometry and surface detail automatically. This gives creators a fast, accessible way to generate assets, experiment with ideas, and build prototypes.

How does generative AI transform the image-to-3D workflow?

Traditionally, turning an image into a 3D model required multiple tools, manual modeling skills, and long hours of work. With a generative AI 3D model pipeline, you upload a photo or concept image and let the platform create the initial geometry, textures, and style. You can then refine the output with manual editing, PBR textures, lighting adjustments, or mesh fixes. This hybrid workflow combines automation with full control, making it easier to explore ideas without building every asset from scratch.

Why are text prompts becoming a game changer for 3D creators?

Text prompts let developers create 3D models from simple descriptions, much as image generation tools do for 2D images. You type an idea such as “a wooden treasure chest with iron details” into a text box, and the AI handles the generation. Game developers and 3D artists already use text-to-3D tools to explore variations, test themes, and prototype objects quickly. Because there is always a starting point, teams avoid blank-canvas paralysis and move from concept to digital object much faster.

What role does machine learning play in improving model quality?

Machine learning powers most modern generative AI systems. During training, a model learns patterns in geometry, textures, style, and spatial relationships from large datasets. This is what allows AI 3D model generators to produce complex shapes, avoid common mesh issues, and generate textures that look realistic. Improvements in the underlying models also translate into better texture generation and more consistent outputs. As tools incorporate user feedback and refinements, they become more reliable for creators across games, animation, virtual reality, and product design.

How do generative AI tools support game developers and real-time engines?

Generative AI 3D model tools are increasingly compatible with game engines such as Unreal Engine and Unity. They produce assets that are closer to game-ready, narrowing the gap between concept art and implementation. Developers can export files in industry-standard formats like GLB, FBX, or OBJ. Combined with PBR textures and automated texture generation, this lets creators bring assets into games, prototypes, or interactive experiences with little conversion work and without losing detail. The result is faster iteration and smoother collaboration across teams.
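To make the export step concrete, here is a minimal Python sketch that writes a triangle mesh as Wavefront OBJ. OBJ is used only because it is a plain-text format that fits in a few lines; GLB and FBX are binary formats that are better produced by a dedicated library or by the exporter in your modeling tool. The function name `to_obj` is hypothetical and is not part of RealityMAX or any engine API, and the sketch deliberately ignores normals, UVs, and materials.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]
Face = Tuple[int, int, int]


def to_obj(vertices: List[Vertex], faces: List[Face]) -> str:
    """Serialize a triangle mesh to Wavefront OBJ text.

    `faces` use 0-based vertex indices, but OBJ expects 1-based
    indices, so every index is shifted by one on output.
    """
    lines = [f"v {x} {y} {z}" for x, y, z in vertices]
    lines += [f"f {a + 1} {b + 1} {c + 1}" for a, b, c in faces]
    return "\n".join(lines) + "\n"


# A single triangle in the XY plane.
triangle = to_obj([(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)], [(0, 1, 2)])
print(triangle)
```

Calling `to_obj` on a single triangle produces three `v` lines followed by `f 1 2 3`, because OBJ face indices start at 1 rather than 0.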

Can generative AI help fix mesh issues and technical limitations?

Many generative AI tools now include features that detect and repair problematic geometry. Fixing mesh issues by hand traditionally requires deep knowledge of topology, pivot points, and structural balance. With AI-assisted cleanup, the platform handles surface imperfections, smoothing, and edge corrections so creators can focus on the creative work. This is especially useful for rapid prototyping, where an AI 3D model only needs to be functional enough for testing and should not demand long repair sessions.
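As an illustration of the kind of cleanup involved, the sketch below performs two classic repairs on an indexed triangle mesh: welding vertices that sit at (nearly) the same position, and removing triangles that collapse to zero area as a result. This is an assumption about what automated cleanup might include, not a description of how any particular platform works; `clean_mesh` and its `decimals` tolerance are hypothetical names.

```python
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]
Face = Tuple[int, int, int]


def clean_mesh(vertices: List[Vertex], faces: List[Face],
               decimals: int = 6) -> Tuple[List[Vertex], List[Face]]:
    """Weld near-duplicate vertices and drop degenerate triangles."""
    key_to_index: Dict[Tuple[float, ...], int] = {}
    remap: List[int] = []  # old vertex index -> new vertex index
    welded: List[Vertex] = []
    for v in vertices:
        # Vertices that agree after rounding are treated as the same point.
        key = tuple(round(c, decimals) for c in v)
        if key not in key_to_index:
            key_to_index[key] = len(welded)
            welded.append(v)
        remap.append(key_to_index[key])

    kept: List[Face] = []
    for a, b, c in faces:
        fa, fb, fc = remap[a], remap[b], remap[c]
        # A triangle that reuses a vertex has zero area, so it is dropped.
        if len({fa, fb, fc}) == 3:
            kept.append((fa, fb, fc))
    return welded, kept


# A triangle plus a sliver that becomes degenerate once vertex 3 is welded to vertex 1.
verts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0000000001, 0.0, 0.0)]
tris = [(0, 1, 2), (1, 3, 2)]
welded, kept = clean_mesh(verts, tris)
print(len(welded), kept)  # prints: 3 [(0, 1, 2)]
```

Rounding to a fixed number of decimals is the simplest welding rule, but it can miss two points that fall on either side of a rounding boundary, and it does not catch thin triangles whose three corners are distinct but collinear. More robust tools typically use a distance-based tolerance and additional validity checks.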

How does texture generation work within generative 3D pipelines?

AI-powered texture generation takes the base geometry and builds detailed surface information on top of it. From wood grain to metallic reflections, the AI produces realistic PBR (physically based rendering) textures based on your text prompt or reference image. This helps models look convincing and ready for rendering, animation, or virtual reality. It also reduces the need for external tools to author sophisticated materials, improving speed and consistency across projects.
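Under the hood, PBR materials are usually described by a small set of parameters. The sketch below builds two materials in the metallic-roughness model defined by the glTF 2.0 specification (the material format used inside GLB files), using its `baseColorFactor`, `metallicFactor`, and `roughnessFactor` fields. The color and roughness values are illustrative guesses for the treasure-chest example, and `pbr_material` is a hypothetical helper. A generated asset would normally also reference texture maps such as `baseColorTexture` and `metallicRoughnessTexture`, which are omitted here to keep the example short.

```python
import json
from typing import Any, Dict, Sequence


def pbr_material(name: str, base_color: Sequence[float],
                 metallic: float, roughness: float) -> Dict[str, Any]:
    """Build a glTF 2.0 metallic-roughness material as a plain dict."""
    if len(base_color) != 4:
        raise ValueError("base_color must be RGBA (4 values)")
    # glTF factors are normalized to the [0, 1] range.
    for value in (*base_color, metallic, roughness):
        if not 0.0 <= value <= 1.0:
            raise ValueError("PBR factors must lie in [0, 1]")
    return {
        "name": name,
        "pbrMetallicRoughness": {
            "baseColorFactor": list(base_color),
            "metallicFactor": metallic,
            "roughnessFactor": roughness,
        },
    }


# Illustrative materials for "a wooden treasure chest with iron details".
wood = pbr_material("wood", [0.45, 0.30, 0.18, 1.0], metallic=0.0, roughness=0.8)
iron = pbr_material("iron", [0.56, 0.57, 0.58, 1.0], metallic=1.0, roughness=0.4)
print(json.dumps({"materials": [wood, iron]}, indent=2))
```

Separating "is it metal?" (`metallicFactor`) from "how blurry are reflections?" (`roughnessFactor`) is what lets the same renderer show both rough wood and polished iron consistently.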

What are the advantages of using a web platform for generative 3D creation?

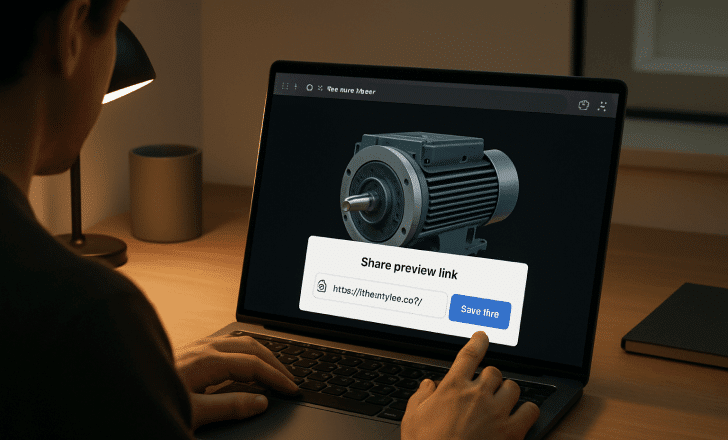

Web-based platforms like RealityMAX eliminate the need for heavy installations or complex setups. Everything happens in the browser, where creators can generate, edit, and share 3D scenes in one place. This supports collaboration across teams, letting developers, designers, and clients interact with scenes in real time. A web platform can also reduce the risk of unauthorized use by offering secure viewing links instead of raw file downloads, protecting your work while still enabling feedback.

How does generative AI integrate with virtual reality workflows?

Generative AI 3D model tools accelerate VR content creation by making it easier to produce objects, environments, and textures at scale. Once a model is generated, creators can use VR previews to check scale, proportion, and interactivity. This reduces the back-and-forth between modeling programs and VR engines. Whether building training simulations or immersive storytelling experiences, creators have a faster way to populate scenes with consistent, high-quality models.

Does generative AI remove the need for manual modeling?

Generative AI does not replace manual modeling, but it changes how and when creators use it. Manual editing is still essential for refining shapes, adjusting pivot points, optimizing polygon counts, and preparing assets for final production. Instead of spending hours on foundational shapes, teams use AI 3D tools to generate a solid base, which artists then shape with precision. AI becomes an assistant that handles repetitive work, letting creators focus on style, storytelling, and polish.

What types of creators benefit the most from generative AI 3D models?

Game developers, product designers, content creators, and animators benefit greatly from generative AI 3D model pipelines. Developers get faster prototyping. Designers visualize concepts from simple text descriptions. Animators quickly build objects for scenes. Even beginners using free plans or basic tools can generate models without needing deep technical knowledge. Entire teams gain more speed, flexibility, and creative freedom.

Can generative AI help prevent unauthorized use of 3D files?

Web-based generative 3D platforms can protect files by limiting raw downloads. Instead of sending files in industry-standard formats to external collaborators, you can share viewing links that maintain access control. This reduces the risk of unauthorized use while still supporting collaboration. In RealityMAX, for example, you can share an interactive 3D scene that stakeholders can rotate, zoom, and explore without receiving the original model files.
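One common way to implement access-controlled viewing links is to sign each link with a server-side secret and an expiry time. The Python sketch below does this with HMAC-SHA256 from the standard library. It is a hypothetical illustration, not RealityMAX's actual mechanism: the secret, the `viewer.example.com` URL, and the `make_view_link` and `verify_view_link` functions are all assumptions. In this design the server checks the link before rendering the scene in its viewer and never serves the original model file.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical secret; a real deployment would load this from secure storage.
SECRET_KEY = b"replace-with-a-server-side-secret"


def _signature(scene_id: str, expires: int) -> str:
    """HMAC-SHA256 over the scene id and expiry timestamp."""
    message = f"{scene_id}:{expires}".encode()
    return hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()


def make_view_link(scene_id: str, ttl_seconds: int = 3600,
                   base_url: str = "https://viewer.example.com/scene") -> str:
    """Create a view-only link that expires after ttl_seconds."""
    expires = int(time.time()) + ttl_seconds
    query = urlencode({"id": scene_id, "expires": expires,
                       "sig": _signature(scene_id, expires)})
    return f"{base_url}?{query}"


def verify_view_link(link: str, now: Optional[float] = None) -> bool:
    """Return True only if the link is unexpired and its signature matches."""
    params = parse_qs(urlparse(link).query)
    try:
        scene_id = params["id"][0]
        expires = int(params["expires"][0])
        sig = params["sig"][0]
    except (KeyError, ValueError):
        return False
    current = time.time() if now is None else now
    if current > expires:
        return False
    expected = _signature(scene_id, expires)
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(sig.encode(), expected.encode())


link = make_view_link("chest-01", ttl_seconds=600)
print(link, verify_view_link(link))
```

A signed link controls who can open the viewer and for how long; it cannot stop someone from capturing what they see on screen, so it reduces rather than eliminates the risk of unauthorized use.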

How does generative AI speed up 3D video and animation workflows?

AI-generated 3D models can be used right away for animation, video previews, or marketing content. When a model comes out with clean topology and detailed textures, creators can skip several steps of the animation pipeline. Early scene building becomes much faster, which helps teams test motion, lighting, and overall visual direction. This speed is especially valuable when deadlines are tight or concepts must be iterated quickly.

How does generative AI compare to traditional modeling methods?

Traditional modeling requires manual geometry creation, UV unwrapping, texture painting, and constant iteration. Generative AI flips this process: the base model, textures, and details are generated quickly from a text description or reference image. Artists then refine, edit, or enhance the results. This hybrid method saves time and can produce more consistent outputs while still leaving room for creative nuance. The biggest advantage is speed without sacrificing control.

What does seamless collaboration look like with generative AI?

3D models generated on a collaborative platform let every team member access the same scene in real time. Feedback comes faster, and iterations happen directly in the browser. Developers can test how assets look under different lighting conditions, designers can experiment with materials, and clients can review objects without exporting files. The result is a production environment where creative work moves forward without blockers.

What are the limitations of generative AI 3D tools today?

While generative AI offers impressive speed, it still has limitations. AI-generated meshes may require cleanup. Stylized outputs may not match a specific art direction. Machine learning models sometimes misinterpret text prompts that use abstract wording. For professional game-ready workflows, manual modeling and texture painting remain important. Generative AI accelerates the process, but artistic judgment still defines final quality.

How can creators maintain control over style and direction with AI?

Control comes through iteration. By refining text prompts, uploading multiple reference images, or adjusting textures manually, creators can steer generative AI toward their visual intent. Modern tools let you override default materials, fix mesh issues, retexture models, or reshape geometry. You are not limited to what the AI generates; instead, the output becomes a foundation that you expand with your own skills and vision.

What does the future hold for generative AI 3D model workflows?

The future points toward real-time generation, higher-fidelity textures, better topology, and AI-powered animation. We can expect deeper integrations with game engines, wider use in video production, and more accessible tools for beginners. As artificial intelligence advances, generative AI 3D model workflows are likely to become one of the fastest ways to build worlds, objects, scenes, and interactive experiences, giving creators more freedom and less friction at every stage of development.